What is 1337NET?

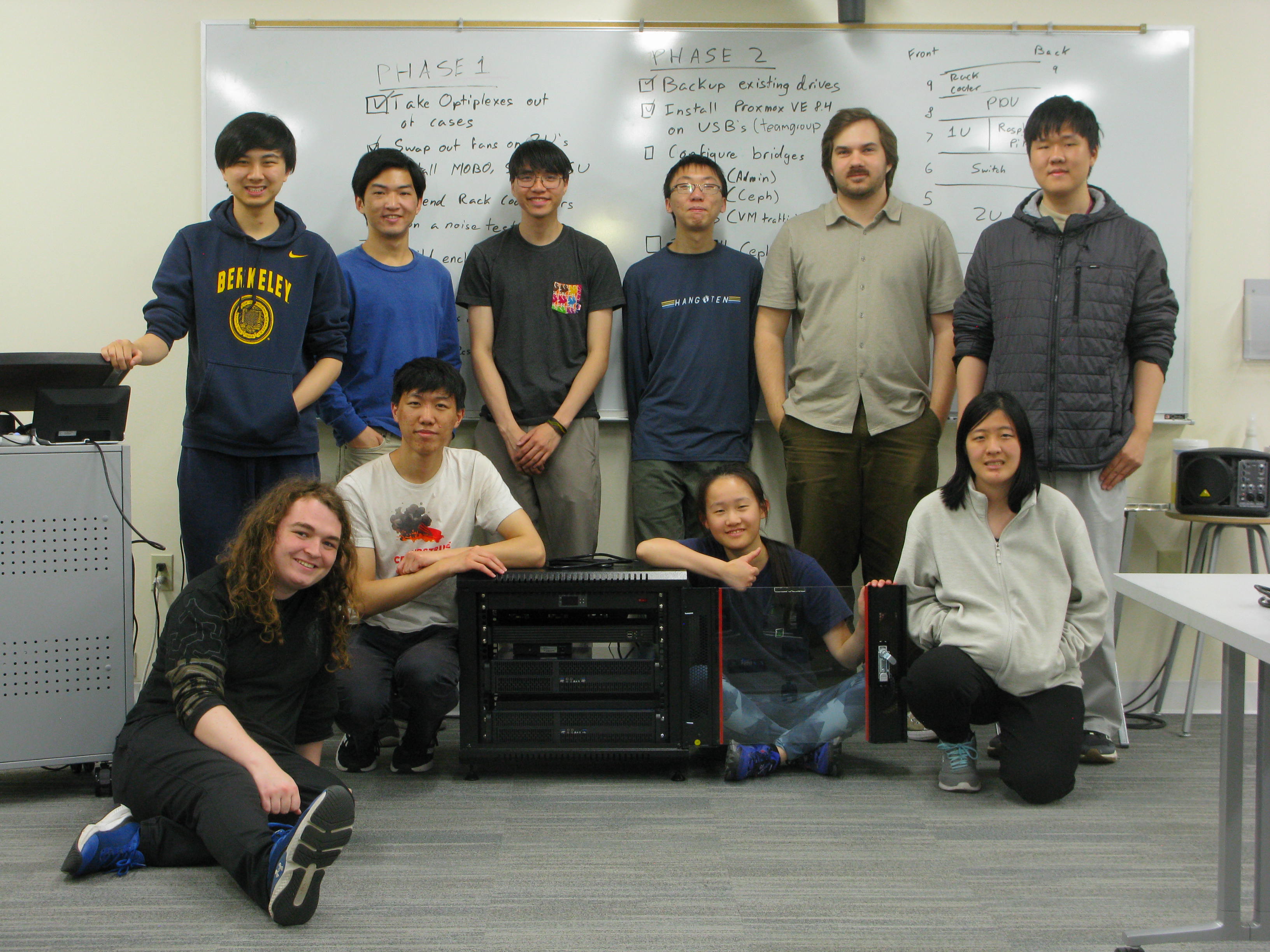

Bottom (L -> R): Owen, Matthew, Anita, Liz.

Since BERKE1337, my cybersecurity club here at UC Berkeley, has been doing rather well in traditional CTFs, I wanted to pivot our focus to a different category of cybersecurity challenges: network defense. This started sometime in December/January, when we were working towards competing in the Collegiate Cyber Defense Competition (CCDC). Our coach at the time, Nick Merrill of the CLTC, was able to spot us some funding from the CLTC to purchase equipment for our lab, a small donation which jump-started much of our work in creating a virtual environment where we could practice and familiarize ourselves with network defense topics. This was the inception of 1337NET.

At the time, we didn't have much in terms of space to install our equipment on campus. My goal was always for this to be a project which could be passed down from generation to generation of students in the club, something everyone could turn to and view as the thing which represented our work as a cybersecurity club. Hence, I spent the next couple of months planning the expansion of the build and the hardware it would include. We had to balance restrictions on space, heat, and cost; everything was under consideration. Eventually, we were approved and could make the purchases. Finally, many months later, we had a social where we brought all the parts up to our club room to install the equipment in a small server rack.

1337NET is BERKE1337's lab network for practical cyberdefense exercises. It's the culmination of our officers' and general members' efforts to improve our skills and show others what we can do. It's my crowning achievement as a systems administrator and IT engineer to produce something of this scale. Seven machines, countless virtual machines, a switch, some Raspberry Pis, and the motivation from my peers to get this done. That's what it represents and that is what it means to me. As the network's first systems administrator, I want to make sure that when I graduate at the end of 2025, this network persists and lasts for as long as there are people who find it useful, so that our club can use it to make our mark.

Ok, the sappy stuff is out of the way. More technical stuff ahead.

"I want technical details that will put me to sleep."

I'm so glad to hear this. I've spent far too much time thinking about this project for it to just sit in my brain.

Originally, version 0 of the network, which was funded in part by the CLTC, ran straight out of my apartment. Where else was I going to put it? No clue, guess it was a good place for it to live for a while. It was three Raspberry Pi 4Bs, one of which was donated by a club member, a PoE switch, and two Dell Optiplex 9020s which were saved from the trash can by our club president. In total, the six devices which were the core of our setup were named 1337-LNX1/2/3 and 1337-WND1/2, running a variety of Linux and Windows operating systems. I was able to use my existing infrastructure and ODROID router to act as a VPN server for the 1337NET committee to securely access the machines. I even ran a user database for them, which extended their centralized access to other features and made migration easier down the road.

Now everything fits snugly into an office space adjacent to our club room, which isn't even our club room, just a shared office we have permission to use after all the adults have left. I always told people that it would take a day to build and weeks to configure. It shouldn't come as a surprise that even now I am still nailing down the configuration. Working on features which include redundancy, failover, and high availability poses some interesting challenges, many of which I haven't encountered even in my own home setup. Virtualization is really a brand-new field for me, as my own home server only uses containerization. Proxmox makes this process slightly less painful, but it has its shortcomings, which sometimes have to be solved by just going back into the terminal.

Our first goal was persistence: I don't want to have to come up to the office to do everything all the time. Luckily we got a static IP address, so networking was already a bit easier from the get-go. After a couple of days of working out the MAC spoofing, setting up a virtual router, and only a handful of lockouts, we managed to get a VPN server running. From there, it was a matter of establishing secure communication from device to device. Although it's all under the umbrella of our servers, treating every device like it's a threat is actually not the worst kind of paranoia you can have. Making sure the VLANs were in place and segregating the traffic into its appropriate networks made it so snooping couldn't happen. With so many physical and virtual devices, we want to account for the inevitable gaps in security by making sure each device is locked down and tailored to its particular use. At least, that is the goal.
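To make the "treat every device like it's a threat" idea a bit more concrete, here is a minimal Python sketch of a default-deny policy between network segments. The subnets and the allowed flows are made up for illustration and are not our actual addressing plan or firewall rules; only the three segment names (Management, Ceph, and the VM network) come from the real setup.

```python
import ipaddress

# Hypothetical subnets, one per VLAN. These are illustrative assumptions,
# not the real 1337NET addressing plan.
SEGMENTS = {
    "management": ipaddress.ip_network("10.13.10.0/24"),
    "ceph": ipaddress.ip_network("10.13.20.0/24"),
    "vms": ipaddress.ip_network("10.13.30.0/24"),
}

# Hypothetical allow-list of (source segment, destination segment) pairs.
# Anything crossing segments that is not listed here is denied.
ALLOWED_FLOWS = {
    ("management", "ceph"),
    ("management", "vms"),
}


def segment_of(address):
    """Return the name of the segment containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(src, dst):
    """Default-deny check for traffic from src to dst."""
    src_segment = segment_of(src)
    dst_segment = segment_of(dst)
    if src_segment is None or dst_segment is None:
        return False  # unknown devices are never trusted
    if src_segment == dst_segment:
        return True  # same VLAN: this traffic never crosses the router
    return (src_segment, dst_segment) in ALLOWED_FLOWS


if __name__ == "__main__":
    print(is_allowed("10.13.10.5", "10.13.20.7"))  # management -> ceph
    print(is_allowed("10.13.30.5", "10.13.20.7"))  # vms -> ceph
```

In this sketch a VM can never reach the storage network directly, while a management host can. In practice the enforcement lives in the router and switch configuration rather than in a script, so treat this as a way to reason about the policy, not as the policy itself.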

"Ok, talk about the extraordinary hardware you're working with!"

If you missed it earlier, then I'll say it again. This setup is the prime definition of JANK. I think if I showed a photo of the cable situation we have going on behind the rack, I would have to add a mature content warning at the beginning of the post. It looks like an amateur shibari project gone wrong. It would be solved if I decided to crimp the cables, but even then we're not using a mounted switch, plus we're doing some wonderful front-back back-front rack mounting, meaning the I/O is only available from the center of the rack. There's a severe lack of I/O shields too, as our Optiplexes have proprietary form factors (which led us to coin the term Proprietary Dell Bullshit^TM). On that point, we had to purchase two sets of adapters for both the fans (which were 5-pin) and the PSU (Dell powers their boards with an 8-pin header). Further, the BIOS complains that certain things are unplugged and literally cannot progress to the bootloader unless someone presses F1 to continue past that screen. Aside from that, our server setup is reboot-safe and will almost entirely power back on in the event of a power failure.

On the flip side, our head server, Fishburne, named after Laurence Fishburne, the actor who plays Morpheus in The Matrix, is a wonderful device. It's an upgrade from the routing device I run on my home network. We run an ODROID-H4 Ultra, a very spiffy and fast mini PC which we've mounted in a mini-ITX chassis using Hardkernel's Mini-ITX case adapter. This thing is awesome. It's such a neat piece of hardware in such a small form factor that I really can't complain.

We're using a Sysracks 9U server enclosure to house all our equipment. I didn't personally assemble it on Build Day, but I kept sending people over to it so it could be built. Even with the extra help that team had, it took almost the entire session to construct it. Good on all of you, I know it was tough. This thing is neat because it has all these doors on it, which make managing the rack-mounted equipment a lot easier than if we just had front-back access. It also came with an insane 24 V fan which plugged directly into the PDU. We're not using it anymore, but I'm betting that if we did, our setup might've just lifted off the ground ever so slightly. Instead, we're using a (once again) janky 4-fan system cooler mounted at the top of our setup. The packaging it came in was so bad. It featured four small pieces of styrofoam, which resulted in the ears needing to be bent back into place with a long screwdriver. It's loud, but only really turns on if things literally hit the fan. Its temperature sensor checks what the air is like behind the rack before it kicks in.

I didn't really account for the sag the chassis create on themselves, so we had to come up with some inventive ways to keep the chassis from stressing their ears. The Sysracks enclosure came with a shelf which we mounted at the very bottom of the enclosure, using a spare set of nuts and bolts for it to rest on since it wasn't actually deep enough to reach across the enclosure front to back. This was to hold up the 2U at the bottom of the rack. For the second 2U above that, we used an anti-sag rod like the ones you use for GPUs to hold it up. Everything else above that is a lot lighter and doesn't sag nearly as badly.

Our network bandwidth, both internally and externally, leaves much to be desired. We have some severe bottlenecks which will definitely have to be addressed sometime down the road when we need more throughput. First and foremost, our connection coming from the Ethernet port on the wall is limited to a measly 110 Mb/s. Pitiful, really. At first we were using a Cat5 cable, which I thought was causing the lower throughput, but even after switching to Cat6, our bandwidth did not increase. This sucks for a lot of reasons, but it doesn't stop there. The RJ45 ports on our core devices (our switch and the ODROID) run at 2.5 Gb/s, but all our end devices, such as the Pis and the Optiplexes, are limited to 1 Gb/s. Again, not the worst, but also suboptimal. What really sucks is the limited number of interfaces for all our network needs. I would've liked to have separate interfaces for all three of our networks (Management, Ceph, and the VirtualMachines VLAN), but we've had to resort to sharing the single interface on each machine and then tacking on virtual bridges for network segregation. As I've come to find, writing static interface tables for this is a precarious thing, as I've come very close to losing connection to machines a few too many times. Thankfully, since all the machines are Debian-based, a change to one config can be copied over to another. I'm once again thankful that we have a powerful head node, Fishburne, which runs our router and deals with all this networking overhead.
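For a rough sense of what these numbers mean in practice, here is a small Python sketch that finds the slowest hop on a path and estimates how long a transfer would take. The hop order and the 4 GB image size are assumptions for illustration, the wall-port figure is read as megabits per second, and real throughput will be lower than line rate once protocol overhead is counted.

```python
# Link speeds in megabits per second, taken from the figures above.
# The exact hop order is an assumption about how traffic flows.
EXTERNAL_PATH = {
    "wall port": 110,
    "ODROID router": 2500,
    "switch": 2500,
    "Optiplex NIC": 1000,
}

INTERNAL_PATH = {
    "Optiplex NIC (Chong)": 1000,
    "switch": 2500,
    "Optiplex NIC (Parker)": 1000,
}


def bottleneck(path):
    """Return (hop name, speed in Mb/s) for the slowest hop on the path."""
    return min(path.items(), key=lambda hop: hop[1])


def transfer_seconds(size_gb, link_mbps):
    """Ideal transfer time for size_gb decimal gigabytes at link_mbps."""
    megabits = size_gb * 8 * 1000  # 1 GB = 8000 megabits
    return megabits / link_mbps


if __name__ == "__main__":
    image_gb = 4  # hypothetical VM image size
    for label, path in (("external", EXTERNAL_PATH), ("internal", INTERNAL_PATH)):
        hop, speed = bottleneck(path)
        seconds = transfer_seconds(image_gb, speed)
        print(f"{label}: limited by {hop} at {speed} Mb/s, about {seconds:.0f} s")
```

Under these assumptions, pulling a 4 GB image in from outside takes on the order of five minutes, while moving it between the two storage nodes takes about half a minute. That gap is why the wall port, not the 1 Gb/s end devices, is the first thing worth fixing.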

"But you are using cutting edge software, the best money can buy?"

I had a dream that this setup printed money, but when I woke up I realized it only really does the opposite right now. Because it's Berkeley, and because it's "on-brand," we're using entirely open-source software. We may not have the money to make things easy, but we sure do have the time to configure all this software so it works the way we want it to.

I think that using open-source software achieves a different kind of freedom than having infinite money does. Hard times make tough people, and instead of having everything "just work", we get to spend time learning the intricacies of how software works and how different protocols and mechanisms interact with each other. I personally find it pretty satisfying to be able to fine-tune my software, and I feel equally satisfied when it all works in the end, even if it takes a day to do. Ultimately, it's cheaper in the long run, and setting it up this way now instead of transitioning to it after we've run out of money makes it that much more worth it.

As I've mentioned before, we run Proxmox as our virtualization platform, and we leverage many of the tools it integrates with. Our storage is handled by our Optiplexes, named Chong and Parker. Since they're in 2U chassis, they actually have some drive bays that we throw the drives into, and those drives become connected via Ceph. This offers redundancy and failover support, as well as VM and container migration should either Chong or Parker go down. It works decently well, and so far I've been able to set up our central authentication with Samba AD DC so users' accounts work across the network and on hosts, which is really the shining point of the network thus far. We run a multi-master setup, and users only have to register with Samba to have access to literally all the rest of the services we run (more on this soon). It's a really nice quality-of-life improvement, and infinitely easier to use and more extensible than just using OpenLDAP like we did in the previous version of the lab. Since I use OPNsense on my homelab and personally really like it, that's what we're using as our router. We also run our own DNS, NTP, and OpenVPN servers for various levels of management access. Once again, feel free to check out the lab diagram to see everything we're running.

One of the key points which really pleases me on this network is the emphasis on identity and authenticity. We run a keyserver which allows people to easily synchronize every member's public keys, offering ways to verify git commits, integrate with XMPP, and verify emails and messages. This is awesome, and I'd be lying if I said I didn't think it's over the top. Once again, my response is that it is "on-brand" and leverages long-standing mechanisms which are employed in many professional environments today. I'm overall pleased with how flexibly these existing services work with each other, which highlights one of the things I appreciate the most in the open-source community: the emphasis on maintainability for tried and tested services and their integration with each other. I only hope that one day I'll be able to create or contribute to a piece of software which has such a long-lasting impact as the ones we use on the network.

"So who's gonna use it all?"

Hopefully the club will! I foresee this as our development network where we run training programs and develop programs, tools, and guides to use throughout our journey and in competitions. In the long-term, I would totally want this to also be a learning platform which anyone can log into and practice doing things. As a security-focused club, I think moving towards a non-proprietary service for everything is the way to go. When we control our servers and services, we can rest assured that nothing nefarious is going on. Discord, Git, and other services which are so popular are so corporate as of late, and filled with advertisements. It's even more insulting when you pay for any of these services and you STILL get ads. For that reason, self-hosting our critical services is very necessary and gives us more identity.

I expect that by the end of the summer and next semester, I'll have a nice way to register users seamlessly and they'll be able to use our services easily. Hoping someone holds me accountable for this, but something tells me I'll be able to do it anyways.

One of the points I would like to make is that I designed this lab network to build community across other cybersecurity clubs as well. Leave it to Berkeley to be the builders of a network; it's in our nature. We decided to go with XMPP as our main chat server because of its ability to federate with other networks. I imagine being able to host a guide which a cybersecurity club at another school can follow to bring up their own XMPP server and have it federate with ours, joining their user base with ours without the need for a centralized authority; make it distributed. XMPP also has the added benefit of being built on XML, which results in less overhead and processing power spent on federation synchronization, something which current Matrix servers struggle with and the primary reason we're not running that. Another boon for Samba is that many of the user details registered on that platform automatically sync across services: think username, profile, attached email, full name, everything.
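Federation in XMPP hinges on the address format: every user has an address (a JID) of the form local@domain/resource, and a server hands off any message whose domain is not its own to the server responsible for that domain. Here is a minimal Python sketch of that routing decision. The domains are placeholders rather than our real hostnames, and the parser skips the normalization and validation rules a real server applies.

```python
from typing import NamedTuple, Optional


class Jid(NamedTuple):
    """The three parts of an XMPP address: local@domain/resource."""
    local: Optional[str]
    domain: str
    resource: Optional[str]


def parse_jid(text):
    """Split a JID into its parts (simplified, no string normalization)."""
    # The resource is everything after the first slash, if there is one.
    bare, slash, resource = text.partition("/")
    # The local part is everything before the first "@" in what remains.
    if "@" in bare:
        local, _, domain = bare.partition("@")
    else:
        local, domain = None, bare
    if not domain:
        raise ValueError(f"JID has no domain part: {text!r}")
    return Jid(local or None, domain.lower(), resource if slash else None)


def delivery(recipient, local_domain):
    """Decide whether our server delivers locally or hands off to a peer."""
    jid = parse_jid(recipient)
    return "local" if jid.domain == local_domain.lower() else "federated"


if __name__ == "__main__":
    ours = "chat.club-a.example"  # placeholder for our server's domain
    print(delivery("alice@chat.club-a.example/laptop", ours))
    print(delivery("bob@chat.club-b.example", ours))
```

The point of the sketch is that no central directory is involved: the domain in the address alone tells each server where a message belongs, which is what lets another club's server join in just by existing and being reachable.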

I would also want the CLTC or even the UC Berkeley Cybersecurity clinic to be able to use the network to aid in their mission, as well as to save them some money on hosting costs. Naturally, we would provide those services at a cost, but make it less than what they would pay another vendor. If you missed it, those were my plans for monetization: getting a steady balance added to our club's account.

If you've got to the end of this blog, then I'm sure you're at least interested in what I have to say next. This is my formal invitation to you, the reader, to join my 1337NET Committee, a group within BERKE1337 which is responsible for maintaining the network and achieving any number of goals I've listed up until this point and beyond. This is a pretty decent undertaking, but it builds great experience and is, in my opinion, one of the best ways you can gain experience with IT and cybersecurity best practices.

If you're not a student at UC Berkeley but someone who is in industry, I would also kindly ask that you send me a message so we can connect. I am in the job market looking for engineering, IT, cybersecurity, and SOC roles for new grads. Hopefully the skills displayed in this project are enough to pique your interest. Send me an email at kinn.edendev@gmail.com and we can chat further.

Thank you very much for visiting my site and reading my blog! You can read more of my blog posts here, and you can contact me through the social media accounts listed on my contacts page.